Why Do Security Engineers Still Rely on Manual Reviews Despite an AppSec Toolkit with Dozens of Tools?


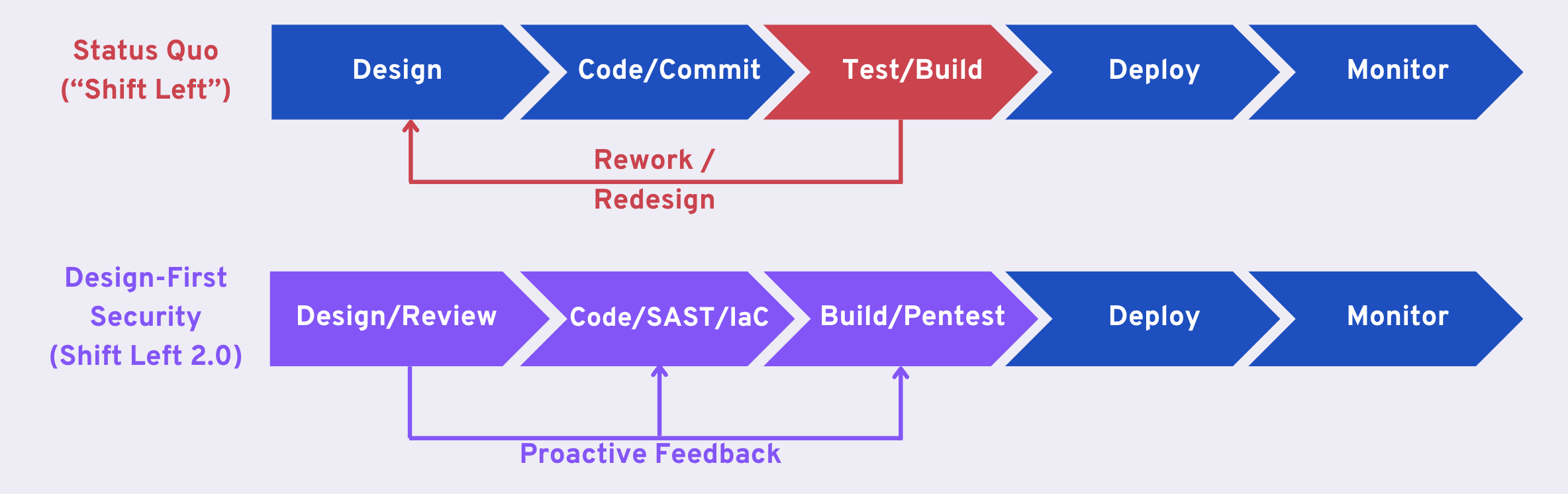

Despite modern AppSec tools promising automated vulnerability management, security engineers still rely on manual code review to identify a significant share of vulnerabilities. This article discusses different types of vulnerabilities and why existing tools fail to identify some of them. In particular, we posit that existing tools were designed to assist with isolated steps of the security engineering workflow, and none has been built with the “design-first” approach required to identify complex vulnerabilities.

Design-first and threat-modeling approaches bring a unified view of security into the security engineering process, targeting security reviews and remediation efforts at the threats most relevant to the application and the business. Because threat modeling requires a logical understanding of the code and of business risk that is beyond the capabilities of existing tools, the process is still largely manual. Recently, large language models (LLMs) have shown promise in code comprehension and in processing disparate, unstructured data. This opens a new set of opportunities to build the next generation of AppSec tools.

We believe that the next generation of AppSec tools will:

- Utilize AI to enhance code comprehension and incorporate business logic into security reviews.

- Leverage the threat model to target the security reviews to specific threats most relevant to the application and business.

- Automate design reviews to identify logical flaws as early as possible.

Security Vulnerabilities: The Role of Implementation vs Logic

At a high level, security vulnerabilities can be traced back to two distinct origins: implementation and logical flaws.

Implementation Flaws

An implementation flaw is a weakness in the code, or in how software is deployed and maintained, that a threat actor can exploit to gain unauthorized access or perform unauthorized actions. Implementation flaws include buffer overflows, SQL injection, unpatched software, weak passwords, misconfigured security settings, and outdated protocols.

Logical Flaws

A logical flaw is an error in an application’s logic or design that can lead to security vulnerabilities. These flaws often result from incorrect assumptions made during the design phase or from errors in how the application handles specific processes or business logic. For example, an authentication flow might assume that an internal library validates its input when it does not, allowing someone to craft a malicious query; or an internal pricing page may be accidentally reachable because end users are assigned an overly permissive role.
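To make the pricing-page example concrete, here is a minimal, hypothetical sketch; the `Role` enum, the role names, the business rule, and both functions are invented for illustration. The code is syntactically clean and contains no injection sink, so a pattern-based scanner has nothing to flag. The flaw is that the check admits any logged-in user, including end users, which contradicts the (assumed) rule that only finance staff and admins may see internal pricing.

```python
from enum import Enum
from typing import Optional


class Role(Enum):
    # Hypothetical roles in an e-commerce back office.
    END_USER = "end_user"
    SUPPORT = "support"
    FINANCE = "finance"
    ADMIN = "admin"


# Assumed business rule: only finance staff and admins may see internal pricing.
PRICING_ROLES = {Role.FINANCE, Role.ADMIN}


def can_view_pricing_buggy(role: Optional[Role]) -> bool:
    # Logical flaw: the author assumed only staff accounts reach this page,
    # so "is the user logged in?" stands in for "is the user allowed?".
    return role is not None


def can_view_pricing_fixed(role: Optional[Role]) -> bool:
    # Fix: check the caller's role against the business rule explicitly.
    return role in PRICING_ROLES


print(can_view_pricing_buggy(Role.END_USER))  # True: end users see pricing
print(can_view_pricing_fixed(Role.END_USER))  # False
```

Nothing in `can_view_pricing_buggy` matches a known-bad code pattern; spotting the problem requires knowing who is supposed to see the page.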

Identification Methods

Vulnerability detection requires a combination of automated tools and manual reviews. Security engineers use static application security testing (SAST) tools such as Snyk, Semgrep, and Veracode to identify security issues. These tools are most effective at finding problems that can be detected by analyzing small chunks of code. For instance, a code scanner can automatically detect a code injection issue by identifying a service’s entry point and then performing a local search to determine whether the application sanitizes user input before it reaches a dangerous call.
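The local search described above can be sketched with Python’s standard `ast` module. This is a deliberately simplified illustration, not how Snyk, Semgrep, or Veracode work internally. It assumes that every function parameter carries untrusted input (a stand-in for entry-point detection), treats any `.execute(...)` call as the sink, and flags a call when its query argument is an f-string, a `+`/`%` expression, or a `.format()` call built from a parameter. The sink name and these heuristics are assumptions.

```python
from __future__ import annotations

import ast

SOURCE = '''
def get_user(cursor, user_id):
    cursor.execute(f"SELECT * FROM users WHERE id = {user_id}")

def get_user_safe(cursor, user_id):
    cursor.execute("SELECT * FROM users WHERE id = %s", (user_id,))
'''


def names_in(node: ast.AST) -> set[str]:
    """Collect every variable name referenced inside an expression."""
    return {n.id for n in ast.walk(node) if isinstance(n, ast.Name)}


def is_dynamic_string(node: ast.AST) -> bool:
    """True if the expression builds a string at runtime (f-string, +, %, .format)."""
    if isinstance(node, ast.JoinedStr):
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "format"
    )


def find_injection_candidates(source: str) -> list[tuple[str, int]]:
    findings = []
    for func in ast.walk(ast.parse(source)):
        if not isinstance(func, ast.FunctionDef):
            continue
        # Assumption: every parameter of the function carries user input.
        params = {a.arg for a in func.args.args}
        for call in ast.walk(func):
            if (
                isinstance(call, ast.Call)
                and isinstance(call.func, ast.Attribute)
                and call.func.attr == "execute"  # assumed sink
                and call.args
            ):
                query = call.args[0]
                # Flag only when the query string is built from a parameter.
                if is_dynamic_string(query) and names_in(query) & params:
                    findings.append((func.name, call.lineno))
    return findings


print(find_injection_candidates(SOURCE))  # → [('get_user', 3)]
```

The parameterized query in `get_user_safe` passes because its query argument is a constant. The check looks only inside one function at a time; if the query were assembled in another module or another service, this local search would miss it.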

However, some security issues require a deeper understanding of the code. For example, issues that arise between microservices, or from packages imported from internally built libraries, commonly require manual review, because SAST tools are limited to the context of a single repository and don’t incorporate business logic into their analysis. As a result, security engineers perform manual code reviews or rely on penetration testing, red-team exercises, or bug bounty findings to search for logical flaws.

Security professionals also use Dynamic Application Security Testing (DAST) and Interactive Application Security Testing (IAST) tools. These can help identify issues that manifest only under specific conditions during execution, in production or production-like environments. Their main limitation is that they work only in the later stages of development, so the issues they find may require significant rework to fix.

Mitigation, Prevention, and Remediation Strategies

Implementation flaws are mitigated by following secure coding practices, regular code reviews, patch management, and using security frameworks and libraries.

Logical issues often result from design decisions made early in the development pipeline. To identify such design flaws, security engineers must engage in early design conversations with the engineering team, build a threat model, and provide feedback on the design. Remediating logical flaws requires changes in the application’s design or logic, which can involve rethinking workflows, redesigning certain features, and implementing more robust business logic checks.

The table below summarizes the distinction between implementation and logical flaws and how approaches to investigating and identifying them differ.

Examples

The distinction between implementation and logical flaws is best understood through examples. The table below lists some of the most common security vulnerabilities and whether they result from implementation or logical flaws.

Challenges in Automating Manual Security Reviews

Now that the distinction between logical and implementation flaws is clear, we can discuss why logical flaws are fundamentally difficult to identify without manual human review.

Lack of universal patterns for logical flaws

Automated code scanners can identify implementation flaws as long as security teams can describe the code patterns associated with those vulnerabilities. However, many security issues stem from logical flaws in how an application’s architecture is designed or how it interacts with third-party data sources. These issues are highly dependent on business risk and context, so it is almost impossible to develop a list of patterns that applies universally.

Human reviewers find logical flaws through a targeted search: they start with the threat model to narrow down the classes of problems they are looking for. None of the existing tools takes a design-first or threat-model approach, which makes it challenging for those tools to define patterns for logical flaws.
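A minimal sketch of that targeted search, assuming a hypothetical threat model stored as structured data: each threat has a name and an assumed business-impact score, and a hand-written table (also hypothetical) maps review classes to the threats they could realize. Filtering by the threat model tells a reviewer, or a tool, which classes of problems to look for in a given application and in what order. The class names loosely echo familiar vulnerability categories, but the mapping itself is illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    impact: int  # assumed scale: 1 (low) to 5 (critical) business impact


# Hypothetical mapping from review classes to the threats they could realize.
REVIEW_CLASSES = {
    "broken access control": {"unauthorized pricing access", "account takeover"},
    "business logic abuse": {"coupon fraud", "unauthorized pricing access"},
    "SQL injection": {"customer data exfiltration"},
    "insecure deserialization": {"remote code execution"},
}


def targeted_review_plan(threat_model: list[Threat]) -> list[tuple[str, int]]:
    """Return review classes relevant to this threat model, highest impact first."""
    impact_by_threat = {t.name: t.impact for t in threat_model}
    plan = []
    for review_class, threats in REVIEW_CLASSES.items():
        relevant = [impact_by_threat[t] for t in threats if t in impact_by_threat]
        if relevant:
            plan.append((review_class, max(relevant)))
    # Sort by impact (descending), then by name for a stable order.
    return sorted(plan, key=lambda item: (-item[1], item[0]))


storefront = [
    Threat("unauthorized pricing access", impact=4),
    Threat("coupon fraud", impact=3),
    Threat("customer data exfiltration", impact=5),
]
print(targeted_review_plan(storefront))
```

Insecure deserialization drops out because nothing in this threat model depends on it; the plan points limited review time at the threats that matter to this application rather than enumerating every known bug pattern.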

Disparate, unstructured sources of information

Identifying logical flaws requires a deeper understanding of the application’s business logic, risks, and threat model. This information is either not readily available or is stored in disparate, unstructured sources, making it challenging to build an automated pipeline to process it.

AppSec tools need to process and incorporate data sources such as threat models, security policies, and compliance requirements to identify logical flaws automatically. Processing the natural language in such documents and integrating it with a deterministic process such as code scanning has been a persistent technical challenge.

The Path to Automation

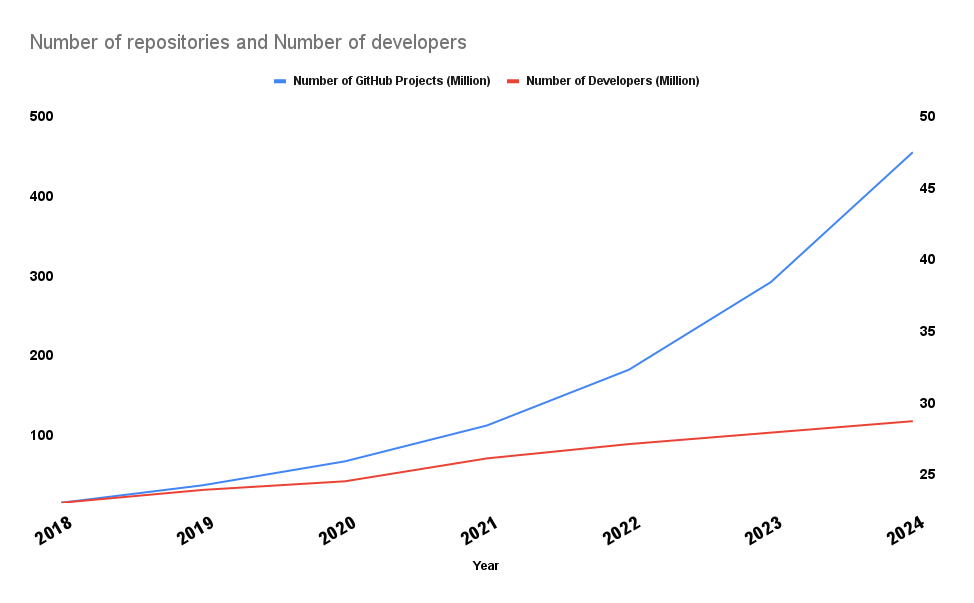

Relying on manual code review alone is becoming untenable due to several compounding trends:

- Explosive growth in the pace of new code production, supercharged by the adoption of coding assistants.

- The growing complexity of programming frameworks makes it difficult for security engineers to keep up, reducing the depth and breadth of manual review coverage and increasing the risk of missed security issues.

- The changing makeup of development teams, such as offshore or fully remote teams, makes it harder for security engineers and developers to meet in real time to understand and review the code. This results in lost context and longer review cycles.

To identify logical flaws, we need:

- A logical understanding of the code beyond syntactic and semantic knowledge, i.e., a design-first analysis.

- A contextual understanding of security threats and business risks, i.e., a threat model.

A Hybrid Approach to Code and Logic Comprehension

Automated code scanners cannot identify logical flaws on their own; they rely on humans to process unstructured data and translate business requirements into rule sets. Language models, however, have opened new possibilities for analyzing unstructured data and building a better understanding of a codebase. Some studies suggest that LLMs are better than humans at identifying logical flaws in code, while others warn against blindly relying on LLMs to comprehend code logic. The right approach will likely combine language models with deterministic techniques such as abstract syntax trees (ASTs), control flow graphs (CFGs), and call graphs (CGs).
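As a rough illustration of the deterministic half of such a hybrid, the sketch below uses Python’s `ast` module to build a simple intra-module call graph and then collects the source of every function reachable from an entry point. That bundle of code, rather than a single file or function, is the kind of context one might hand to a language model together with the threat model. The helpers `build_call_graph`, `reachable`, and `context_for` are hypothetical; only direct calls by name are resolved (no methods, imports, or dynamic dispatch), and the LLM step itself is omitted.

```python
from __future__ import annotations

import ast

SOURCE = '''
def handle_request(req):
    user = load_user(req)
    return render_prices(user)

def load_user(req):
    return req.get("user")

def render_prices(user):
    return fetch_prices()

def fetch_prices():
    return {"sku-1": 9.99}

def unused_helper():
    return None
'''


def top_level_functions(tree: ast.Module) -> dict[str, ast.FunctionDef]:
    return {n.name: n for n in tree.body if isinstance(n, ast.FunctionDef)}


def build_call_graph(tree: ast.Module) -> dict[str, set[str]]:
    """Map each top-level function to the top-level functions it calls by name."""
    funcs = top_level_functions(tree)
    return {
        name: {
            c.func.id
            for c in ast.walk(node)
            if isinstance(c, ast.Call)
            and isinstance(c.func, ast.Name)
            and c.func.id in funcs
        }
        for name, node in funcs.items()
    }


def reachable(graph: dict[str, set[str]], entry: str) -> list[str]:
    """Iterative depth-first traversal; returns functions in visit order."""
    seen, stack, order = set(), [entry], []
    while stack:
        name = stack.pop()
        if name in seen:
            continue
        seen.add(name)
        order.append(name)
        # Push callees in reverse alphabetical order so they pop alphabetically.
        stack.extend(sorted(graph.get(name, ()), reverse=True))
    return order


def context_for(source: str, entry: str) -> str:
    """Concatenate the source of every function reachable from entry."""
    tree = ast.parse(source)
    funcs = top_level_functions(tree)
    names = reachable(build_call_graph(tree), entry)
    return "\n\n".join(ast.get_source_segment(source, funcs[n]) for n in names)


print(context_for(SOURCE, "handle_request"))
```

The printed context contains `handle_request`, `load_user`, `render_prices`, and `fetch_prices`, but not `unused_helper`. The graph decides *what* code is relevant deterministically, leaving the model to reason about *whether* that code respects the business rules.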

Using the Threat Model to Inform the Analysis

A threat model formalizes the business risks and security requirements, which helps target the analysis and prioritize the remediation of logical flaws. I have discussed the role of the threat model in previous posts.

Design-First Security

Logical flaws are best identified and fixed during the design phase. Automated design reviews and feedback can help prevent logical flaws before a single line of code is written. One challenge for design review automation is the lack of a standard process for development teams to formalize and document their designs. Different teams store design information on different platforms, and in some cases there are no design documents at all. This makes integration with those platforms all the more important. We plan to write about this space in a future blog post.
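Where a team does record its design in a structured form, some design review checks could run before any code exists. The sketch below assumes a hypothetical, minimal design format (each component is assigned a trust zone, and data flows connect components) and applies one illustrative rule: every flow that moves from a less trusted zone into a more trusted one must declare authentication. The schema, the zone names and their ordering, and the rule are all assumptions, not an established standard.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed trust ordering: higher numbers are more trusted.
TRUST = {"internet": 0, "dmz": 1, "internal": 2}


@dataclass(frozen=True)
class Flow:
    source: str
    target: str
    authenticated: bool


def review_design(zones: dict[str, str], flows: list[Flow]) -> list[str]:
    """Flag unauthenticated flows that move into a more trusted zone."""
    findings = []
    for flow in flows:
        src = TRUST[zones[flow.source]]
        dst = TRUST[zones[flow.target]]
        if dst > src and not flow.authenticated:
            findings.append(
                f"{flow.source} -> {flow.target}: "
                "unauthenticated trust-boundary crossing"
            )
    return findings


# A hypothetical storefront design, expressed as data rather than prose.
design_zones = {
    "browser": "internet",
    "api-gateway": "dmz",
    "pricing-service": "internal",
}
design_flows = [
    Flow("browser", "api-gateway", authenticated=True),
    Flow("api-gateway", "pricing-service", authenticated=False),
]
print(review_design(design_zones, design_flows))
# → ['api-gateway -> pricing-service: unauthenticated trust-boundary crossing']
```

The hard part in practice is not the rule but getting designs into a shape like `design_zones` and `design_flows` in the first place, which is exactly the integration problem described above.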

Summary

The problem with modern security practice is the lack of a “unified theory of code security” that informs AppSec programs based on an organization’s specific needs. Existing AppSec tools are point solutions that automate isolated tasks for security engineers. These tools help security engineers chase vulnerabilities but fail to model an organization’s specific security needs and determine which rules to create. Security engineers are left with dozens of tools yet still spend significant time finding and chasing vulnerabilities.

One critical insight on the path to AppSec automation is that there are different classes of vulnerabilities, and some are much harder than others to identify automatically with existing tools. Specifically, security engineers spend a significant amount of time manually identifying and fixing logical flaws caused by incorrect reasoning about design decisions or business logic, or by wrong assumptions about other components, microservices, or internal libraries. Identifying these “logical flaws” requires a logical understanding of the code beyond syntactic and semantic knowledge, as well as a contextual understanding of security threats and business risks.

To identify logical flaws, we need a design-first and threat-model approach. But automating this process has been hindered by the lack of universal patterns for logical flaws, sparse documentation, and disparate, unstructured sources of information. Recently, large language models have shown promise in code comprehension and in processing unstructured data. More research is needed to evaluate how effective language models are at identifying and fixing vulnerabilities, but the final solution will likely be a hybrid approach that combines the unique capabilities of LLMs with deterministic analysis.

In short, automating AppSec workflows requires a combination of the following:

- Utilizing the latest AI advancements to enhance code comprehension and processing of disparate, unstructured data.

- Leveraging the threat model to target the security reviews to specific threats most relevant to the application and business.

- Automating design reviews to identify logical flaws as early as possible.

Acknowledgements

I want to thank James Berthoty of Latio Tech, Ian Livingstone, and Branden Dunbar for reviewing early drafts of this article.
