Secure Design Is the New Front Line of AppSec


The future of AppSec isn't about chasing bugs or triaging alerts. It's about capturing intent, governing design, and enabling every contributor (human or AI) to build securely by default.

In the age of AI-generated code and vibe coding, secure-by-design is no longer optional. It’s foundational.

AI-native IDEs such as Cursor and Windsurf, integrated build-and-deploy platforms such as Vercel, chat-interface product platforms such as Lovable, and CI/CD processes continue to improve. As a result, product and design teams are pushing code straight into GitHub and prototyping features live in production. AI IDEs are generating both tickets and code, and development velocity now far outpaces what security teams can track. AppSec can’t keep up unless it evolves.

In this article, I break down the core threats of this new era and how we can bring security into the AI-native development stack.

Reactive Security Fails in a World Where Code Is Being Written Faster Than It Can Be Reviewed

AI-native development tools have gone mainstream. They’re not just assistive IDEs; they’re becoming the main drivers of development workflows for both internal and external apps.

This shift boosts productivity but exposes the cracks in today’s AppSec model. Traditional scanners are reactive, overwhelmed, and too late in the process, especially when AI can ship more code in an hour than humans can review in a week.

Detection isn’t enough. We need proactive, secure-by-design systems that make it hard to write insecure code in the first place, without slowing down innovation. The answer isn’t smarter scanners; it’s breaking the patching cycle and shifting security earlier, where it can actually keep up.

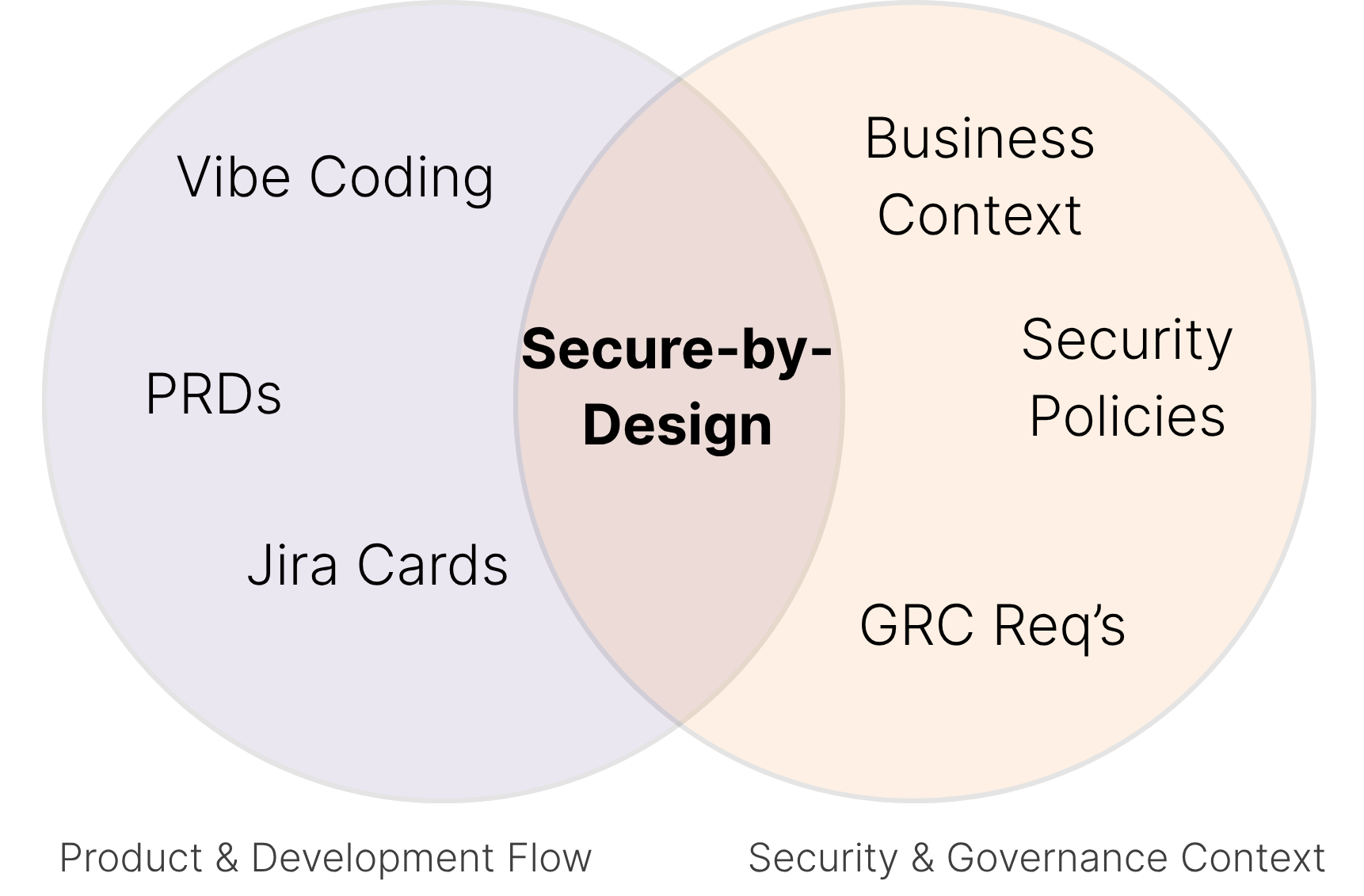

Security Context Lives Outside the Editor

By the time code is being written, it’s already too late to influence the architecture, enforce security requirements, or validate that critical risks were considered upfront.

AI-native IDEs can now generate full apps from Figma files and Jira tickets, but they often move faster than teams can think about architecture, let alone threat models.

Many assume these tools will “learn” security over time. But secure development isn’t just in the code; it’s in the organization. LLMs can’t infer business context, threat models, or adversary behavior from scattered, undocumented knowledge.

As one CISO recently told me, moving from FinTech to social media completely changed his threat landscape: from fraud and insider risk to misinformation and nation-state operations. Same role, entirely different risks.

When you code with tools like Lovable, you can expect that they’ll eventually be good enough to tell you automatically how to mitigate a DDoS attack. But they won’t tell you whether DDoS is even the threat you should care about in the first place. That still requires a deep understanding of the business, the adversaries, and the mission.

This classic “threat-driven” model illustrates why secure development decisions can’t come from code alone; the real context lives in business logic, adversary modeling, and operational workflows that lie outside the IDE.

Giving AI code generators safe boundaries will supercharge the SDLC

AI coding tools aren’t just writing code. They’re shaping architecture, and with it, defining how risk enters a system.

To build safely at this new velocity, we need systems that:

- Extract security requirements from early designs

- Validate intent before implementation

- Enforce secure patterns, policies, and controls throughout development
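As a rough illustration of validating intent before implementation, here is a minimal Python sketch, assuming a design is captured as a list of data flows annotated with trust zones. The `DataFlow` schema, the zone names, and the single trust-boundary rule are hypothetical; they are not taken from any particular threat-modeling tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    """One arrow in a design diagram (hypothetical schema, not a real tool's format)."""
    source: str
    target: str
    source_zone: str  # trust zone of the sender, e.g. "internet" or "internal"
    target_zone: str  # trust zone of the receiver
    authenticated: bool
    encrypted: bool


def review_design(flows: list[DataFlow]) -> list[str]:
    """Return design-level findings for a set of data flows.

    Illustrative rule only: a flow that crosses a trust boundary
    (source and target in different zones) must be authenticated and encrypted.
    """
    findings: list[str] = []
    for flow in flows:
        if flow.source_zone == flow.target_zone:
            continue  # no trust boundary crossed, so this rule does not apply
        label = f"{flow.source} -> {flow.target}"
        if not flow.authenticated:
            findings.append(f"{label}: crosses a trust boundary without authentication")
        if not flow.encrypted:
            findings.append(f"{label}: crosses a trust boundary without encryption")
    return findings


# A hypothetical design, captured before any implementation exists.
design = [
    DataFlow("browser", "api", "internet", "internal", authenticated=True, encrypted=True),
    DataFlow("partner-webhook", "api", "internet", "internal", authenticated=False, encrypted=True),
    DataFlow("api", "db", "internal", "internal", authenticated=False, encrypted=False),
]

for finding in review_design(design):
    print(finding)
# partner-webhook -> api: crosses a trust boundary without authentication
```

What matters here is when the check runs, not how sophisticated it is. It evaluates the design itself, so the unauthenticated partner webhook surfaces before anyone, human or AI, writes the handler.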

It’s not about slowing AI down; it’s about giving it a paved road. Guardrails catch mistakes. Paved roads prevent them. That’s the power of secure design and repeatable patterns.

The Future: A Layered Model for AI-Native AppSec

We’re moving toward a world where security is not a gate, but a system of composable, collaborative layers:

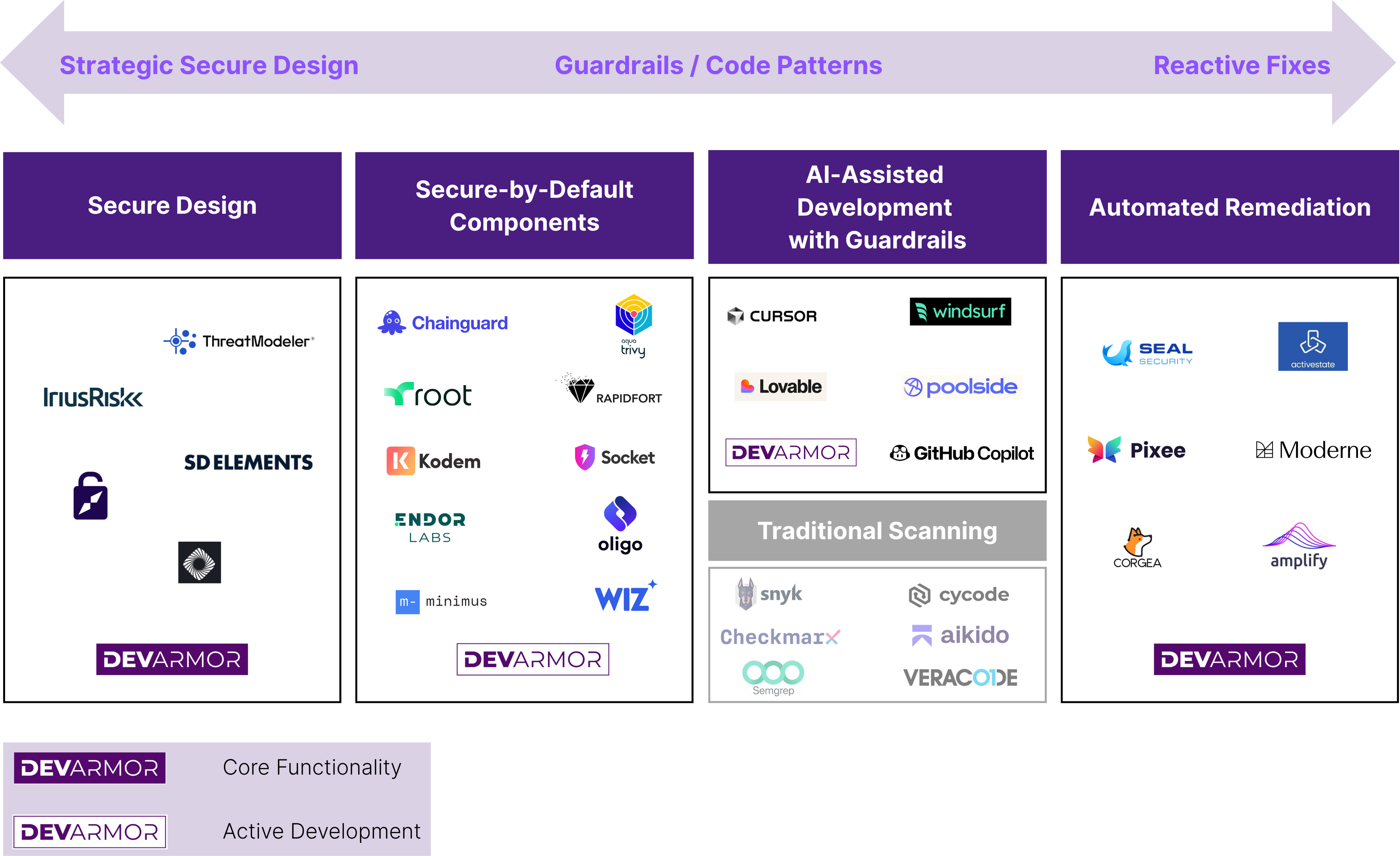

1. Secure Design

Proactive security starts before code is written. Legacy tools like IriusRisk, ThreatModeler, and SD Elements offer structured frameworks for threat modeling, but they’re often manual and disconnected from real workflows.

2. Secure-by-Default Components

These tools secure the building blocks of modern software (open source libraries, containers, and third-party packages) by analyzing dependencies and enforcing trust boundaries. While traditional SCA tools crowd the space, newer approaches like Oligo, Socket, Endor Labs, and Kodem use a variety of reachability methods, both static and runtime, to reduce false positives. Solutions like Chainguard, Minimus, and Root are pushing “secure-by-default” infrastructure with hardened, policy-backed components.

3. AI-Assisted Development with Guardrails

This emerging category enhances developer productivity with generative AI while embedding secure-by-default patterns and guardrails into code generation. As developers spend more time perfecting prompts than writing code (e.g., vibe coding), security tools need to be embedded in the AI prompts or in the rule sets for AI IDEs.

4. Automated Remediation

These platforms focus on identifying issues and fixing them automatically or with minimal developer intervention. From refactoring insecure code to managing patch workflows, the goal here is to accelerate secure delivery at scale.

Automated code improvement in modern AppSec falls into three buckets: full-app refactoring (e.g., Moderne), patching insecure dependencies (e.g., Seal Security, ActiveState), and targeted fixes for first-party code based on scan results (e.g., Corgea, Pixee). These tools help teams stay secure without slowing down development.

We published a similar map in early 2024, but this updated version leaves out traditional ASPMs and code scanners. Some incumbent vendors in this space offer early combinations of these functionalities (e.g., Semgrep), but many are still in “wait and see” mode. What we’re seeing now is a squeeze on traditional static scanners: AI-native development is rapidly disrupting the way code is written and reviewed. As a result, scanners are either being pulled further left into design-time modeling or pushed right into validation and testing phases. Our updated AppSec map aims to reflect where we see AppSec going, not where it’s been.

The New AppSec Mandate

Vulnerability management consistently ranks as the worst problem in AppSec. The real question: how fast can we shift from chasing vulnerabilities to building with intent?

AI has transformed software development, and security leaders must now design for risk, not just manage it. The age of reactive security is ending; the future is proactive, continuous, and built into the design process.

Traditional guardrails struggled not because we couldn’t find vulnerabilities, but because fixing them at scale was a massive lift. Take SQL injection: once discovered, remediation meant mapping exposure across stacks, teams, and custom codebases. That’s months of writing documents, syncing stakeholders, and planning patches before a single line gets fixed.
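For the SQL injection case, a paved road can be as simple as a shared data-access helper that only runs vetted statements and always binds values as parameters, so the insecure pattern is harder to write than the secure one. A minimal sketch using Python’s standard `sqlite3` module; the `QUERIES` registry and the `run_query` helper are hypothetical conventions, not a specific product’s API.

```python
import sqlite3

# Hypothetical registry of vetted, pre-written statements. Callers choose a
# statement by name and pass values separately; they never supply SQL text.
QUERIES: dict[str, str] = {
    "create_users": "CREATE TABLE users (name TEXT, role TEXT)",
    "insert_user": "INSERT INTO users (name, role) VALUES (?, ?)",
    "user_by_name": "SELECT name, role FROM users WHERE name = ?",
}


def run_query(conn: sqlite3.Connection, name: str, params: tuple = ()) -> list[tuple]:
    """Run a vetted statement with values bound as parameters, never concatenated."""
    if name not in QUERIES:
        raise KeyError(f"unknown query {name!r}: add it to QUERIES for review first")
    return conn.execute(QUERIES[name], params).fetchall()


conn = sqlite3.connect(":memory:")
run_query(conn, "create_users")
run_query(conn, "insert_user", ("alice", "admin"))
run_query(conn, "insert_user", ("bob", "user"))

# A classic injection payload is bound as a literal value, so it matches no rows.
print(run_query(conn, "user_by_name", ("bob' OR '1'='1",)))  # []
print(run_query(conn, "user_by_name", ("bob",)))             # [('bob', 'user')]
```

The design choice is that callers of the helper have no way to splice input into SQL text, and every new statement passes through review when it is added to the registry. Calling `conn.execute` directly still bypasses the helper, so a team would likely pair this with a lint rule or review policy.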

And that’s exactly the point: relying on detection-first models means we’ve already failed. That’s why vulnerability management remains the weakest link in AppSec: not for lack of tools, but because those tools catch issues too late, after the damage is already done.

True secure-by-design means embedding security into the design phase, before a single line of code is written. As AI accelerates everything downstream, this shift isn’t optional anymore; it’s urgent. A new AppSec wave is rising to meet this need.

Why we are excited about this change at DevArmor

As AI-native tools take over implementation, the biggest risks are shifting upstream, from insecure code to insecure design. That means AppSec must shift too: away from scanning and patching, toward design governance and architectural control.

DevArmor is a first-mile innovation, not a last-mile patch.

While most tools focus on tactical fixes, DevArmor empowers teams to start secure: automating threat modeling, validating design decisions, and enforcing policies before code is written.

We help security and engineering teams:

- Generate threat models from specs, diagrams, and tickets

- Catch design flaws before a single line of code is written

- Enforce policies across GitHub, CI/CD, and IaC, without relying on tribal knowledge

- Provide secure-by-default building blocks that both AI and humans can use safely

If you’re building your AppSec stack for the AI era, let’s talk.

Acknowledgements

I want to sincerely thank Rami McCarthy, James Berthoty, Frank Wang, and Coleen Coolidge for their feedback on drafts of this article.
